You’ve heard the term “language model” all over the tech world, especially with tools like ChatGPT, Gemini, and Claude. But what is a language model?

In this guide, we’ll break it down in plain English: no math, no jargon, just real understanding.

📘 In Simple Terms…

A language model (LM) is an AI system trained to understand and generate human-like text.

It learns:

- How we write

- How sentences flow

- What word comes next (based on what came before)

That’s it! It’s not magic; it’s prediction.

🧩 How Does It Work?

Let’s say you type:

“Python is a programming ___”

A language model sees that and predicts the most likely next word:

→ “language.”

Why? Because it’s seen that pattern in millions of examples.
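To make this concrete, here is a minimal sketch of the simplest possible next-word predictor. It counts which word follows which in a tiny, made-up corpus and picks the most frequent one. The corpus and the `predict_next` helper are hypothetical illustrations: this toy looks at only one previous word, while models like ChatGPT use neural networks that look at the whole context. The goal, guessing the likely next word, is the same.

```python
from collections import Counter, defaultdict

# A tiny, made-up training corpus (real models read billions of words).
corpus = [
    "python is a programming language",
    "java is a programming language",
    "rust is a programming language",
    "scratch is a programming tool",
]

# "Training": count which word follows each word in the corpus.
next_word_counts = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for current, following in zip(words, words[1:]):
        next_word_counts[current][following] += 1

def predict_next(word):
    """Return the word seen most often right after `word`, or None if unseen."""
    counts = next_word_counts.get(word)
    if not counts:
        return None
    return counts.most_common(1)[0][0]

print(predict_next("programming"))  # language
```

“language” wins because it followed “programming” three times, while “tool” did so only once.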

💡 Analogy:

A language model is like autocomplete on steroids: instead of suggesting one word, it can complete full thoughts, summaries, or essays.
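The “full thoughts” part comes from repetition: predict one word, append it, then predict again using the longer text. The sketch below uses a hypothetical word-count table as a stand-in for a real model and always takes the single most likely word (so-called greedy decoding). Real chatbots use neural networks and often add some randomness when choosing each word.

```python
from collections import Counter, defaultdict

# Hypothetical training text for the stand-in "model".
corpus = [
    "language models predict the next word",
    "models predict the next word from the words before it",
    "the next word is chosen one step at a time",
]

# Count which word follows each word.
next_word_counts = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for current, following in zip(words, words[1:]):
        next_word_counts[current][following] += 1

def complete(prompt, max_new_words=5):
    """Greedily extend `prompt` by repeatedly appending the most likely next word."""
    words = prompt.split()
    for _ in range(max_new_words):
        counts = next_word_counts.get(words[-1])
        if not counts:  # no known continuation: stop early
            break
        words.append(counts.most_common(1)[0][0])
    return " ".join(words)

print(complete("language"))  # language models predict the next word
```

Each loop iteration is one prediction; a longer answer is simply more iterations.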

🧪 Real-World Examples

| Tool | What the Language Model Does |
|---|---|
| ChatGPT | Carries a conversation by generating responses |
| Grammarly | Predicts and suggests better sentence structures |
| YouTube captions | Predicts the most likely words when transcribing speech |
| Google Search | Predicts what you’re trying to ask |
| Smart Reply in Gmail | Suggests quick, relevant replies |

🧠 Language Models Learn from Data

They don’t “know” facts like humans do.

They’ve just read tons of text and learned patterns.

Some famous models:

- GPT-4 / ChatGPT (OpenAI)

- Gemini (Google DeepMind)

- Claude (Anthropic)

- LLaMA (Meta)

- BERT (Google)

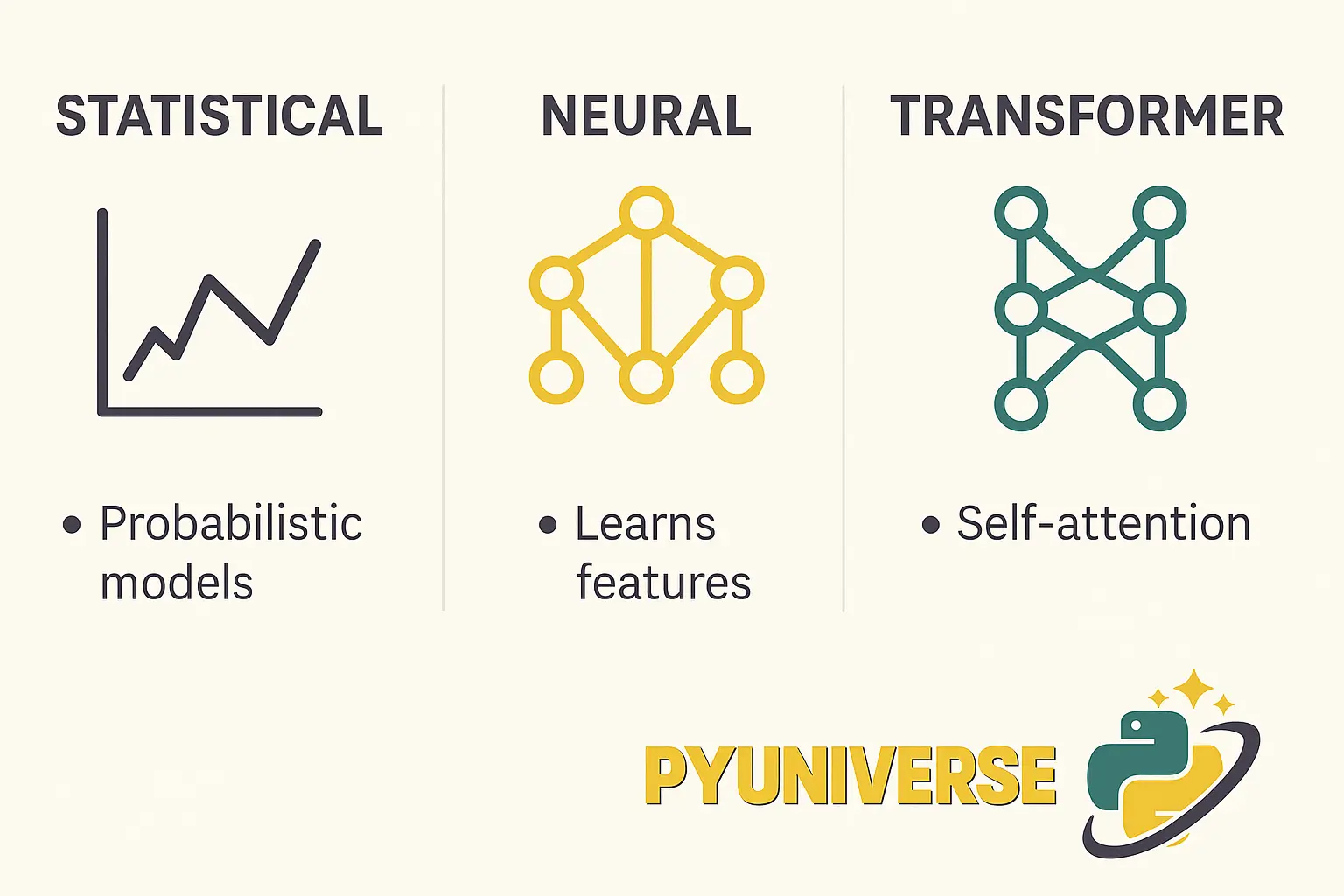

🔍 Types of Language Models

| Type | Description |
|---|---|
| Statistical LM | Early models that used probabilities & word counts |
| Neural LM | Use deep learning to capture complex patterns |
| Transformer LM | Modern standard; powers ChatGPT, Gemini, etc. |
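The “Statistical LM” row can be shown in a few lines. This sketch, built on a hypothetical three-sentence corpus, is a classic bigram model: the chance of a word depends only on the word before it, estimated by dividing counts. A sentence’s probability is the product of those word-by-word chances. Neural and Transformer models replace the counting with learned networks that use much more context, but they still output a probability for each possible next word.

```python
from collections import Counter, defaultdict

# Hypothetical training corpus.
corpus = [
    "python is a programming language",
    "java is a programming language",
    "scratch is a programming tool",
]

# Count how often each word pair (previous, next) appears.
pair_counts = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for previous, following in zip(words, words[1:]):
        pair_counts[previous][following] += 1

def next_word_probability(previous, candidate):
    """P(candidate | previous) = count(previous, candidate) / count(previous, any word)."""
    counts = pair_counts.get(previous)
    if not counts:
        return 0.0
    return counts[candidate] / sum(counts.values())

def sentence_probability(sentence):
    """Multiply the next-word probabilities along the sentence."""
    words = sentence.split()
    probability = 1.0
    for previous, candidate in zip(words, words[1:]):
        probability *= next_word_probability(previous, candidate)
    return probability

print(next_word_probability("programming", "language"))  # 0.6666666666666666
print(sentence_probability("java is a programming tool"))  # 0.3333333333333333
```

Note that “java is a programming tool” never appears in the corpus, yet it still gets a probability, because every word pair in it does. The flip side: any unseen pair (say, “programming joke”) makes the whole sentence score zero, a weakness that neural models handle much better.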

📦 Use Cases (Beyond Chat)

Language models are used for:

- Summarizing articles

- Translating languages

- Answering questions

- Writing emails, resumes, content

- Powering voice assistants

- Writing code (Copilot, Gemini Code Assist)

🔄 Are They Always Right?

Not always.

Language models sometimes:

- “Hallucinate”: confidently make up facts

- Repeat biases in training data

- Struggle with logic or long context

That’s why companies are building hybrid systems that combine language models with logic-based tools.
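A toy example shows why “likely” and “true” can come apart. In this hypothetical corpus, a wrong fact is written more often than the right one, so a simple word-counting model (an illustration, not how any real product works) confidently completes the sentence with the wrong city. Real models are far more sophisticated and hallucinations have more than one cause, but the core issue is similar: the model produces what is probable given its training text, with no built-in check against reality.

```python
from collections import Counter, defaultdict

# Hypothetical training text: the wrong answer appears more often than the right one.
corpus = [
    "the capital of australia is sydney",    # a common mistake
    "the capital of australia is sydney",    # the same mistake, repeated
    "the capital of australia is canberra",  # the correct answer
]

# Count which word follows each word.
next_word_counts = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for current, following in zip(words, words[1:]):
        next_word_counts[current][following] += 1

def predict_next(word):
    """Return the most frequent follower of `word`; frequency, not truth, decides."""
    counts = next_word_counts.get(word)
    return counts.most_common(1)[0][0] if counts else None

prompt = "the capital of australia is"
print(prompt, predict_next(prompt.split()[-1]))  # the capital of australia is sydney
```

The same mechanism explains how biases repeat: whatever pattern dominates the training text tends to dominate the output.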

🧠 Final Thought: They Predict, Not Understand

A language model doesn’t understand you; it predicts what should come next based on its training.

Think of it as autocomplete with a brain.

The results feel smart… because they’re trained on how we talk, write, and think.